Precogs AI Redefines Code Security: Topping the CASTLE Benchmark with AI-Native Precision


At Precogs AI, we’re not just building another static analysis tool—we’re redefining code security for the AI era.

Our platform is designed to understand, reason about, and secure code in real time, and now we have the research to prove it.

Independent results from the CASTLE Benchmark, a rigorous, peer-reviewed evaluation framework, show that Precogs AI outperforms both traditional code security tools and leading large language models (LLMs) in accuracy, usability, and real-world effectiveness.

What is the CASTLE Benchmark?

The CASTLE Benchmark (CWE Automated Security Testing and Low-Level Evaluation) is a curated dataset of 250 compilable C programs, each containing a known vulnerability from the MITRE Top 25 CWEs. It evaluates:

- Code security tools (traditionally known as static application security testing, or SAST)

- Formal verification tools

- Large Language Models (LLMs)

The benchmark uses the CASTLE Score, a holistic metric that rewards high-impact vulnerability detection, penalizes false positives, and reflects real-world usability.
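This post does not spell out the CASTLE Score formula, which is defined by the benchmark itself. The Python sketch below is a rough illustration of the general shape of such a metric. It adds points for each correct detection, with a larger bonus for higher-ranked CWEs, and subtracts a penalty for each false positive. The `Finding` type, the `toy_security_score` function, and every weight are hypothetical and invented for this example. They are not the official CASTLE scoring rules.

```python
# Hypothetical scoring sketch: NOT the official CASTLE Score formula.
# All weights below are illustrative placeholders chosen only to show the idea.
from dataclasses import dataclass


@dataclass
class Finding:
    cwe_rank: int           # rank of the CWE in a Top-25-style list (1 = most dangerous)
    is_true_positive: bool  # True if the finding matches a real vulnerability


def toy_security_score(findings: list[Finding],
                       tp_base: float = 5.0,
                       rank_bonus_max: float = 5.0,
                       fp_penalty: float = 1.0) -> float:
    """Reward correct detections (weighted by CWE rank) and penalize false positives."""
    score = 0.0
    for f in findings:
        if f.is_true_positive:
            # Rank 1 earns the full bonus; the bonus tapers linearly to 0 at rank 25 and beyond.
            bonus = rank_bonus_max * max(0, 25 - f.cwe_rank) / 24
            score += tp_base + bonus
        else:
            # Each false positive costs a fixed penalty.
            score -= fp_penalty
    return score
```

For example, two correct detections (CWE ranks 1 and 25) plus one false positive score 10 + 5 - 1 = 14 under these made-up weights. Because every false positive subtracts points, a noisy tool gives back part of the credit it earns for its detections.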

How Precogs AI Stacks Up

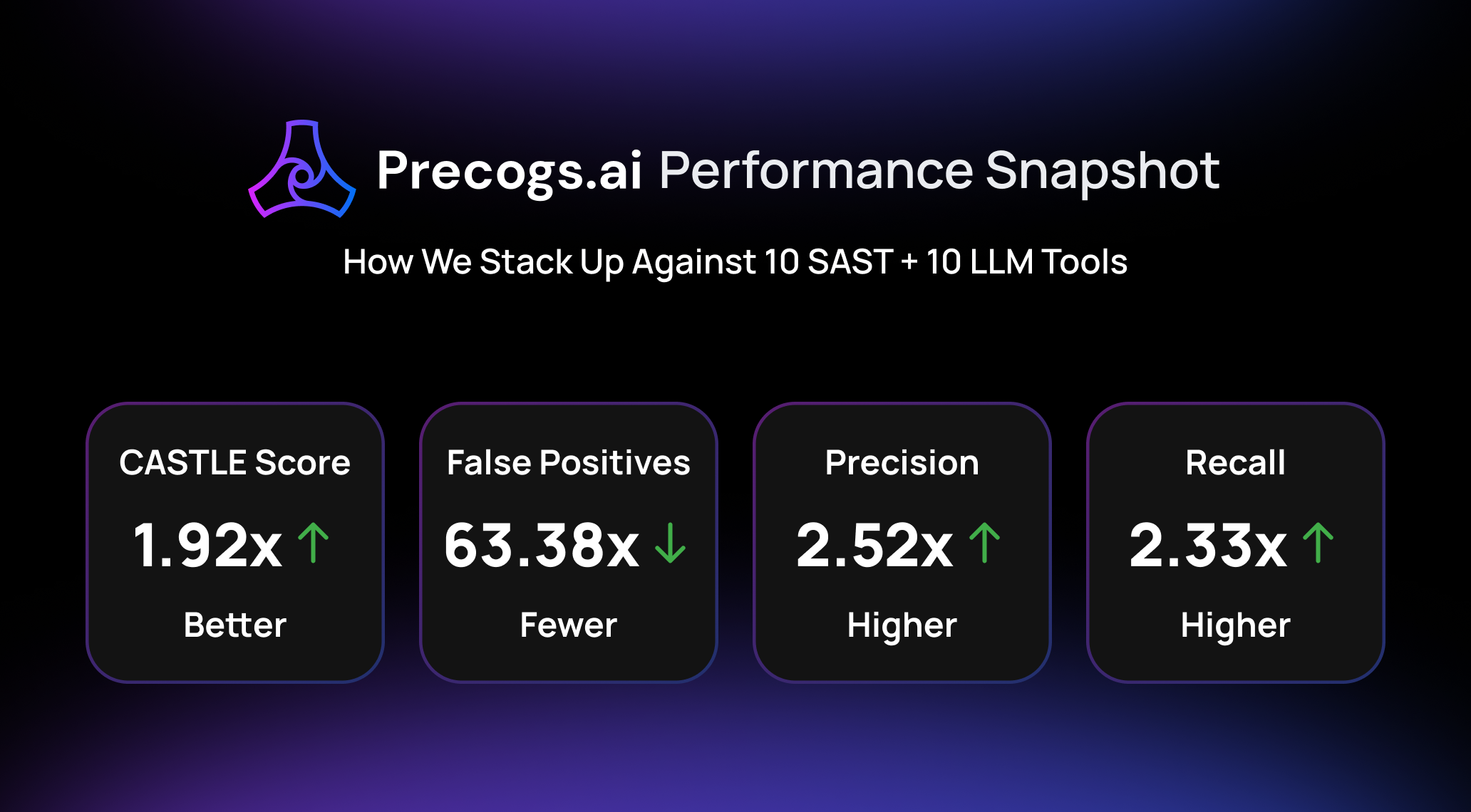

We evaluated our AI-native engine against 10 leading code security tools (including CodeQL, Snyk, SonarQube, and Coverity) and 10 state-of-the-art LLMs (including GPT-4o, DeepSeek R1, and Code Llama variants).

The results are clear:

CASTLE Score Comparison

| Category | No. of Tools | Avg CASTLE Score | Precogs AI Score | % Improvement |
|---|---|---|---|---|
| Code Security Tools | 10 | 478.3 | 1145 | +139.4% |
| LLMs | 10 | 713.8 | 1145 | +60.4% |
| Combined | 20 | 596.05 | 1145 | +92.1% |
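The improvement percentages follow directly from the reported averages. The short Python sketch below reproduces them. It relies on the table's statement that each category contains 10 tools, so the 20-tool average equals the mean of the two category averages.

```python
# Recompute the CASTLE Score comparison from the averages reported in the table.
PRECOGS_SCORE = 1145.0

category_averages = {
    "Code Security Tools": 478.3,  # average over 10 tools
    "LLMs": 713.8,                 # average over 10 tools
}


def pct_improvement(score: float, baseline: float) -> float:
    """Relative improvement of `score` over `baseline`, in percent."""
    return (score - baseline) / baseline * 100


# Both categories have 10 tools, so the 20-tool average is the mean of the two averages.
combined_avg = sum(category_averages.values()) / len(category_averages)

for name, avg in category_averages.items():
    print(f"{name}: +{pct_improvement(PRECOGS_SCORE, avg):.1f}%")
print(f"Combined (avg {combined_avg:.2f}): +{pct_improvement(PRECOGS_SCORE, combined_avg):.1f}%")
print(f"Ratio to combined average: {PRECOGS_SCORE / combined_avg:.2f}x")
```

Running it reproduces +139.4% and +60.4% for the two categories. It also gives +92.1% for the combined row and a ratio of about 1.92x to the combined average.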

Precogs AI's CASTLE Score of 1145 is nearly 2x (1.92x) the average of all 20 tools combined.

False Positives (Lower is Better)

Alert fatigue undermines security programs. We’ve nearly eliminated the noise.

| Category | Total False Positives | Avg per Tool | Precogs AI | Reduction |
|---|---|---|---|---|
| Code Security Tools | 717 | 71.7 | 2 | 97.21% fewer |
| LLMs | 1818 | 181.8 | 2 | 98.90% fewer |
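The reduction column can be checked the same way. The per-tool average is the category total divided by the 10 tools in each category, and the reduction compares that average against Precogs AI's 2 false positives. A minimal Python sketch using only the figures in the table:

```python
# Recompute per-tool false-positive averages and Precogs AI's relative reduction.
NUM_TOOLS = 10                 # tools per category, as reported in the table
PRECOGS_FALSE_POSITIVES = 2

category_totals = {
    "Code Security Tools": 717,
    "LLMs": 1818,
}


def fp_reduction(avg_fp: float, our_fp: float) -> float:
    """Percentage reduction of `our_fp` relative to a per-tool average `avg_fp`."""
    return (avg_fp - our_fp) / avg_fp * 100


for name, total in category_totals.items():
    avg = total / NUM_TOOLS
    print(f"{name}: avg {avg:.1f} FPs/tool, "
          f"{fp_reduction(avg, PRECOGS_FALSE_POSITIVES):.2f}% fewer, "
          f"{avg / PRECOGS_FALSE_POSITIVES:.1f}x lower")
```

The last figure on each line (roughly 36x and 91x) states the gap more concretely than percentages close to 100%.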

Our AI-native engine produces roughly 36x fewer false positives than the average code security tool and roughly 91x fewer than the average LLM, so teams focus only on real risks.

Precision & Recall

| Metric | Code Security Avg | LLM Avg | Precogs AI |
|---|---|---|---|
| Precision | 39.50% | 38.20% | 98% |
| Recall | 18.70% | 62.00% | 94% |
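Precision is the share of reported findings that are real vulnerabilities, TP / (TP + FP). Recall is the share of real vulnerabilities that get reported, TP / (TP + FN). The Python sketch below defines both and adds the F1 score, their harmonic mean, as a single summary figure computed from the rates in the table. F1 is not part of the reported results. F1 computed from averaged precision and recall is also only an approximation of the tools' average F1.

```python
# Precision/recall definitions, plus F1 (harmonic mean) computed from the reported rates.

def precision(tp: int, fp: int) -> float:
    """Share of reported findings that are real vulnerabilities."""
    return tp / (tp + fp)


def recall(tp: int, fn: int) -> float:
    """Share of real vulnerabilities that were reported."""
    return tp / (tp + fn)


def f1(p: float, r: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r)


# (precision, recall) pairs taken from the table above.
reported = {
    "Code Security Avg": (0.395, 0.187),
    "LLM Avg": (0.382, 0.620),
    "Precogs AI": (0.98, 0.94),
}

for name, (p, r) in reported.items():
    print(f"{name}: F1 = {f1(p, r):.3f}")
```

With these inputs, F1 comes out to roughly 0.25 for the code security average, 0.47 for the LLM average, and 0.96 for Precogs AI. The harmonic mean punishes imbalance: the LLMs' 62% recall is dragged down by their 38.2% precision.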

We pair 98% precision with 94% recall, showing that an AI-native analyzer can be both accurate and thorough.

Why AI-Native Code Security Matters

Traditional tools rely on rules and heuristics, leading to:

- High false-positive rates

- Missed contextual vulnerabilities

- Limited adaptability

LLMs bring pattern recognition but struggle with:

- Hallucinations and inconsistency

- Scalability in large codebases

- Structured, actionable outputs

Precogs AI is built differently. Our engine combines:

- Deep semantic understanding of code and context

- Neuro-symbolic reasoning for logical flaw detection

- Continuous learning from real-world security data

- Developer-friendly output that integrates into existing workflows

What This Means for Your Team

1. Shift Left with Confidence

Embed Precogs AI into CI/CD with minimal alert fatigue and few missed vulnerabilities.

2. Reduce Triage Time by 90%+

With 98.9% fewer false positives than the average LLM, developers stay in flow.

3. Coverage That Actually Covers

Detect 94% of vulnerabilities across the benchmark's 25 CWE categories, from memory safety to injection flaws.

4. Future-Proof Security

Our AI adapts as new vulnerabilities emerge—no more waiting for rule updates.

The Bottom Line

Security should accelerate development, not slow it down.

The CASTLE Benchmark confirms: Precogs AI delivers both accuracy and usability, outperforming legacy code security tools and general-purpose LLMs where it matters most.

To learn more about Precogs AI, its products, supported integrations, and key features, see our detailed blog post.